Lilli Thompson from Google asked us how we were doing texture decompression in the pixel shaders and what algorithm we were using. We thought we would share our answer…

Texture compression was a bit of a journey, as no one at Illyriad had ever implemented anything in 3D before; to us, texture compression was mostly a tick box on a graphics card.

It started when we found out our 90MB of JPEGs expanded to 2GB of on-board memory and we were worried we’d made a terrible mistake, as this was certainly beyond the possibilities of low-end hardware! Half of this was due to Three.js keeping a reference to the image while the image was also copied to the GPU process – so essentially the required texture memory doubled.

Dropping the reference Three.js held after the texture was bound to WebGL resolved this. I’m not sure how this will play out with context lost events – as I assume we will have lost the texture at that point – but local caching in the file system and reloading may help with recreating them at speed.
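If the context is lost after the CPU-side copies have been released, the textures would have to be fetched again. A minimal sketch of how that might be wired up, assuming a hypothetical `reloadTextures` callback that re-runs the texture loaders (`webglcontextlost` and `webglcontextrestored` are the standard canvas events; the helper itself is illustrative and not something we have in the demo):

```javascript
// Hypothetical helper: re-run the texture loaders when a lost context comes back.
// `canvas` is the WebGL canvas; `reloadTextures` is an assumed callback.
function watchContextLoss(canvas, reloadTextures) {
    canvas.addEventListener("webglcontextlost", function (event) {
        // Without preventDefault() the browser will not try to restore the context
        event.preventDefault();
    }, false);

    canvas.addEventListener("webglcontextrestored", function () {
        // The GPU copies are gone and no CPU copy was kept, so load again
        reloadTextures();
    }, false);
}
```

With the files cached locally, the reload should mostly be a matter of re-uploading rather than re-downloading.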

With 1GB of memory remaining we were faced with three choices: deciding that what we were trying to do wasn’t possible; reducing the texture sizes and losing fidelity; or trying to implement texture decompression in the shader. Naturally we opted for the last.

We were originally planning to use 24bit S3TC/DXT1; however, this proved overly complex in the time we had available, as the pixel shaders have no integer masking or bitshifts and everything needs to be worked in floats. The wonders we could unleash with binary operators and type casting (not conversion) – but I digress…
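To give a flavour of what "worked in floats" means, here is a small sketch in JavaScript (not shader code) of pulling bit fields out of a number using only divide, floor and modulo. The function names are made up for illustration; the RGB565 layout is the 16-bit endpoint colour format that DXT1 uses.

```javascript
// Extract `count` bits starting at bit `shift` using only float-style arithmetic
// (divide, floor, modulo) instead of >> and &.
function extractBits(value, shift, count) {
    return Math.floor(value / Math.pow(2, shift)) % Math.pow(2, count);
}

// Unpack a 16-bit RGB565 colour into channels in the 0..1 range.
function unpackRgb565(packed) {
    return {
        r: extractBits(packed, 11, 5) / 31,
        g: extractBits(packed, 5, 6) / 63,
        b: extractBits(packed, 0, 5) / 31
    };
}
```

In a shader the same idea would also have to cope with texture reads arriving as normalised floats and with limited float precision, which is where the complexity piles up.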

In the end we compromised on 256 colour palettized textures (using AMD’s The Compressonator to generate P8 .DDS textures). This reduced the texture to one byte per pixel – not as small or high colour as DXT1 – but already 4 times smaller than our original uncompressed RGBA textures.
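As a rough check on the arithmetic, assuming a 1024×1024 texture purely as an example size:

```javascript
// Bytes needed for an uncompressed RGBA texture (4 bytes per pixel)
function rgbaBytes(width, height) {
    return width * height * 4;
}

// Bytes for the palettized version: one index byte per pixel
// plus a 256-entry palette at 4 bytes per entry
function palettizedBytes(width, height) {
    return width * height + 256 * 4;
}

var rgba = rgbaBytes(1024, 1024);      // 4194304 bytes
var p8 = palettizedBytes(1024, 1024);  // 1049600 bytes
var ratio = rgba / p8;                 // just under 4
```

The palette is a fixed 1KB cost, so the saving only drops noticeably below 4× for very small textures.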

It took a while to divine the file format, which we load via XMLHttpRequest into an ArrayBuffer. The files have 128 bytes of header which we ignore, followed by the 256×4 byte palette which we load into an RGBA lookup table texture. The rest we load into a Luminance texture. Both textures need to use NearestFilter sampling and no mipmapping to be interpreted sensibly.
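The layout described above can be sketched as a small standalone function. This is an illustration rather than our loader: `splitP8Dds` is a made-up name, and it assumes a single surface with no mipmaps, so the index data sits directly after the palette.

```javascript
var DDS_HEADER_BYTES = 128;   // 4-byte "DDS " magic plus 124-byte header
var PALETTE_BYTES = 256 * 4;  // 256 entries, 4 bytes each

// Split a P8 .DDS ArrayBuffer into typed-array views over the palette and the indices.
function splitP8Dds(buffer, width, height) {
    var expected = DDS_HEADER_BYTES + PALETTE_BYTES + width * height;
    if (buffer.byteLength < expected) {
        throw new Error("File too small for a " + width + "x" + height + " P8 texture");
    }
    var magic = new Uint8Array(buffer, 0, 4);
    if (String.fromCharCode(magic[0], magic[1], magic[2], magic[3]) !== "DDS ") {
        throw new Error("Not a DDS file");
    }
    return {
        // Views share the underlying buffer, so nothing is copied here
        palette: new Uint8Array(buffer, DDS_HEADER_BYTES, PALETTE_BYTES),
        indices: new Uint8Array(buffer, DDS_HEADER_BYTES + PALETTE_BYTES, width * height)
    };
}
```

Checking the magic bytes and the length up front gives a clearer failure than a garbled texture if the wrong file or the wrong dimensions are passed in.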

We have created our own compressed texture loaders – the colour texture loader looks a little like this:

[code lang="javascript"]
Illyriad.TextureCompColorLoader = function (path, width, height, uniforms) {
    // Placeholder 1x1 index texture, returned immediately and filled in once the file arrives
    var texture = new THREE.DataTexture(0, 1, 1, THREE.LuminanceFormat,
        (new THREE.UVMapping()), THREE.RepeatWrapping, THREE.RepeatWrapping,
        THREE.NearestFilter, THREE.NearestFilter);

    var request = new XMLHttpRequest();
    request.open("GET", path, true);
    request.responseType = "arraybuffer";

    // Decode asynchronously
    request.onload = function () {
        if (request.status == 200) {
            // The index data is the last width * height bytes of the file
            var imageDataOffset = request.response.byteLength - width * height;

            // The 256-entry palette (4 bytes each) follows the 128-byte header
            uniforms.tColorLUT.texture = new THREE.DataTexture(
                new Uint8Array(request.response, 128, 256 * 4),
                256, 1, THREE.RGBAFormat, (new THREE.UVMapping()),
                THREE.ClampToEdgeWrapping, THREE.ClampToEdgeWrapping,
                THREE.NearestFilter, THREE.NearestFilter);
            uniforms.tColorLUT.texture.needsUpdate = true;

            texture.image = { data: new Uint8Array(request.response, imageDataOffset),
                width: width, height: height };
            texture.needsUpdate = true;
        }
    };

    request.send();
    return texture;
};
[/code]

When we first did the decompression in the pixel shader, it was very blocky as we had turned off filtering to read the correct values from the texture. To get around this we had to add our own bilinearSample function to do the blending for us. It takes the diffuse (index) texture and the colour lookup table and, using the texture size and the texture pixel interval, samples the surrounding pixels and blends the looked-up colours. The other gotcha is that the lookup texture is in BGRA format so the colours need to be swizzled. This makes that portion of the shader look like this:

[code]
uniform sampler2D tDiffuse;   // palette indices (Luminance)
uniform sampler2D tColorLUT;  // 256 x 1 colour lookup table
uniform float uTextInterval;  // texture pixel interval
uniform float uTextSize;      // texture size

vec3 bilinearSample(vec2 uv, sampler2D indexT, sampler2D LUT)
{
    // Read the palette index at this pixel and its right, lower and diagonal neighbours
    vec2 tlLUT = texture2D(indexT, uv).xx;
    vec2 trLUT = texture2D(indexT, uv + vec2(uTextInterval, 0)).xx;
    vec2 blLUT = texture2D(indexT, uv + vec2(0, uTextInterval)).xx;
    vec2 brLUT = texture2D(indexT, uv + vec2(uTextInterval, uTextInterval)).xx;

    // Fractional position within the pixel, used as the blend weights
    vec2 f = fract(uv.xy * uTextSize);

    // Look up each colour; .zyxw swizzles BGRA to RGBA
    vec4 tl = texture2D(LUT, tlLUT).zyxw;
    vec4 tr = texture2D(LUT, trLUT).zyxw;
    vec4 bl = texture2D(LUT, blLUT).zyxw;
    vec4 br = texture2D(LUT, brLUT).zyxw;

    // Blend horizontally, then vertically
    vec4 tA = mix(tl, tr, f.x);
    vec4 tB = mix(bl, br, f.x);
    return mix(tA, tB, f.y).xyz;
}

void main()
{
    vec4 colour = vec4(bilinearSample(vUv, tDiffuse, tColorLUT), 1.0);
    …
[/code]
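The important detail is that the blend happens on the looked-up colours, never on the indices themselves, since neighbouring palette entries need not be similar colours. A CPU-side reference in JavaScript can be handy for checking this against the shader output. This is a sketch with assumed names, taking a square texture of `size` texels with repeat wrapping and a BGRA palette:

```javascript
// CPU reference for a palettized bilinear lookup.
// indices: Uint8Array of size * size palette indices
// palette: Uint8Array of 256 BGRA entries (4 bytes each)
// Returns [r, g, b] in the 0..255 range.
function bilinearSample(u, v, indices, palette, size) {
    var x = u * size, y = v * size;
    var x0 = Math.floor(x), y0 = Math.floor(y);
    var fx = x - x0, fy = y - y0;

    // Fetch one texel with repeat wrapping and swizzle BGRA to RGB
    function texel(tx, ty) {
        var wx = ((tx % size) + size) % size;
        var wy = ((ty % size) + size) % size;
        var p = indices[wy * size + wx] * 4;
        return [palette[p + 2], palette[p + 1], palette[p]];
    }

    // Linear blend of two colours, like GLSL mix()
    function mix(a, b, t) {
        return a.map(function (c, i) { return c * (1 - t) + b[i] * t; });
    }

    var top = mix(texel(x0, y0), texel(x0 + 1, y0), fx);
    var bottom = mix(texel(x0, y0 + 1), texel(x0 + 1, y0 + 1), fx);
    return mix(top, bottom, fy);
}
```

Sampling halfway between a black texel and a red texel should give a half-intensity red, whatever the two palette indices happen to be.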

This performs fairly well, and certainly better than when your computer feels some virtual memory is required because you are using too much! However, I’m sure on-board graphics card decompression should be swifter and hopefully open up the more complex S3TC/DXT1-5 compression formats.

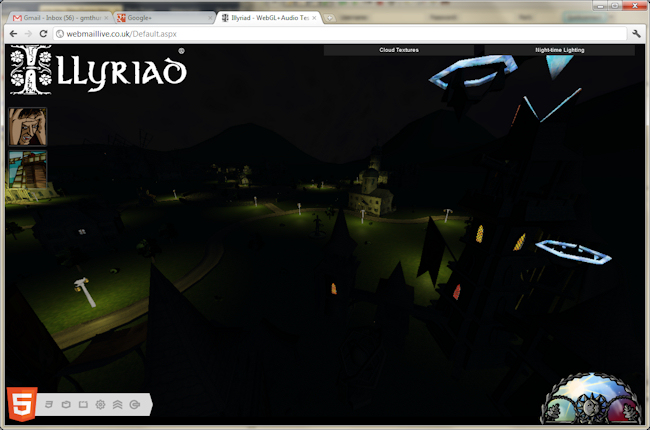

There is a major downside, however, with decompressing this way in the pixel shader. You have to turn off mipmapping! Not only does turning off mipmapping cause a performance hit, as you always have to read the full-size textures, but more importantly it doesn’t look good. In fact, in the demo we had to use full-size textures for the grass so we could apply mipmapping, as otherwise in the distance it was a wall of static!

Unfortunately, as far as I’m aware, while WebGL lets you create mipmaps with generateMipmap, you can’t supply your own. Again, real compressed textures should help here.

EDIT: Benoit Jacob has pointed out this is possible by passing a non-zero ‘level’ parameter to texImage2D – one to look into.
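We haven’t tried this yet, but a sketch of what supplying the levels by hand might look like is below. The `uploadMipChain` name and the `levels` array are hypothetical; the WebGL calls are the standard ones. It assumes each level’s index data has been prepared offline against the same palette, and that the chain runs all the way down to 1×1.

```javascript
// Upload a caller-supplied mip chain for a single-channel (index) texture.
// gl: a WebGLRenderingContext; texture: a WebGLTexture
// levels: hypothetical array of { width, height, data: Uint8Array },
//         where levels[0] is full size and each later entry is half the size.
function uploadMipChain(gl, texture, levels) {
    gl.bindTexture(gl.TEXTURE_2D, texture);
    // Rows of 1-byte texels are not 4-byte aligned at small sizes
    gl.pixelStorei(gl.UNPACK_ALIGNMENT, 1);
    levels.forEach(function (mip, level) {
        gl.texImage2D(gl.TEXTURE_2D, level, gl.LUMINANCE,
            mip.width, mip.height, 0,
            gl.LUMINANCE, gl.UNSIGNED_BYTE, mip.data);
    });
    // Pick the nearest level and texel so index values are never blended
    gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.NEAREST_MIPMAP_NEAREST);
    gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.NEAREST);
}
```

How the manual bilinear blend in the shader would need to change when a smaller level is selected is an open question this sketch does not answer.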

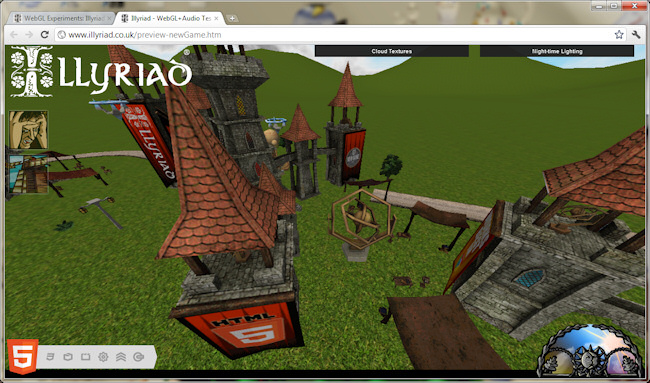

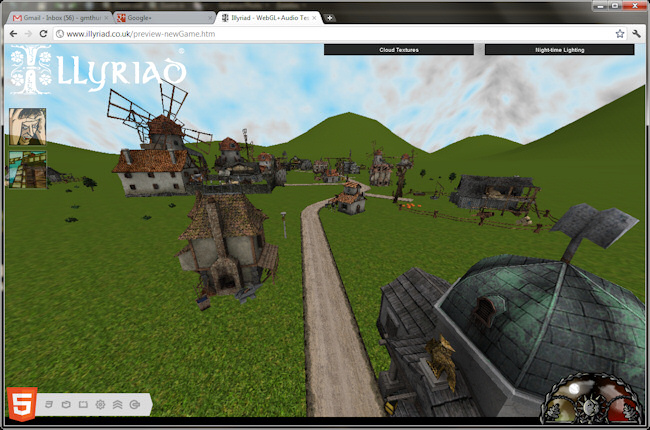

Some caveats on the demo:

- Obviously even 90MB of jpeg textures is far too much – the production version will be substantially smaller, as we are being a bit smarter on how we will be using them.

- This has been a learning process both for us and Quantic Arts (who are used to boxed set games).

- This was a tester to see the upper limits of what we can do in WebGL, so we haven’t been focusing on optimization yet.

- We will be reworking the obj models to reduce their download size substantially.

- The way the game works is that no one player will need all the textures at once (the time between queuing a building and its actual appearance in the game allows us to download the models/texture).

So the actual game requirements will be much, much lower.

We will be mixing these in and out of the game environment dependent upon the map focus on biome. Some examples could be Mediterranean music, jungle music, desert music or polar music. We also plan to have some generic musical motifs and themes that enhance the game experience, such as triumphal themes that linger after you win a battle or trepidation themes that build anticipation before a diplomatic event such as sabotage or assassination. We are generally writing specific music for all of these themes as opposed to licencing them. We are also exploring the Web Audio API for this purpose – almost treating it as a sequencer – so that we can slave a standardized or fractional BPM to the game server clock and use all the clever tricks. For example, we may increase tempo of the music with the player’s proximity to the event outcome.

These are the standardized sounds that occur during gameplay based on triggers such as event queuing or occurrences. For example, starting building construction in game with sound of carpenters sawing or the messenger noise you get when you receive an email. We’re looking at making some of these specific to the game races, so the new mail sound for receiving an in game message is different for Elves and Orcs. Some of these are being worked on currently by James Bell, our audio engineer. He is

Different from the motif music, these are specifically audio snippets (some looped, some one-off or randomly triggered) that are geo-specific, especially within the